In my last post, I talked about Network IO Control. This time I'd like to discuss another interesting feature of the new vSphere 4.1: Storage IO Control.

Storage IO Control is really easy to set up, and it leaves the difficult work to VMware. There is quite a bit of information about it on the Internet, so I'm trying to put it together and explain it to you in an easy way.

What is Storage IO Control?

Storage I/O Control (SIOC), a new feature offered in VMware vSphere 4.1, provides a fine-grained storage control mechanism by dynamically allocating portions of hosts’ I/O queues to VMs running on the vSphere hosts based on shares assigned to the VMs. Using SIOC, vSphere administrators can mitigate the performance loss of critical workloads during peak load periods by setting higher I/O priority (by means of disk shares) to those VMs running them. Setting I/O priorities for VMs results in better performance during periods of congestion.

There is some misunderstanding here. Some people say SIOC only kicks in when the threshold is breached. My understanding is that once you enable this feature, SIOC is working for you all the time, keeping datastore latency close to the congestion threshold.

Prerequisites of Storage IO Control

- Datastores that are Storage I/O Control-enabled must be managed by a single vCenter Server system.

- Storage I/O Control is supported on Fibre Channel-connected and iSCSI-connected storage. NFS datastores and Raw Device Mapping (RDM) are not supported.

- Storage I/O Control does not support datastores with multiple extents. (We should always try to avoid spanning a datastore across multiple extents, and also try to use a consistent block size for VAAI's sake.)

- Before using Storage I/O Control on datastores that are backed by arrays with automated storage tiering capabilities, check the VMware Storage/SAN Compatibility Guide to verify whether your automated tiered storage array has been certified to be compatible with Storage I/O Control. (I believe the latest EMC FAST is supported with this feature, but you have to wait for FLARE 30 to make it work.)

- All ESX hosts connected to a datastore on which you want to use SIOC must be running ESX 4.1. (You can't enable SIOC while an ESX 4.0 host is connected to that datastore.) Of course, you need vCenter 4.1 as well.

- Last but not least, you need an Enterprise Plus license to enable this feature. 😦
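If you manage many datastores, it can help to run through the list above programmatically. The sketch below is only an illustration: the `Datastore` class, its fields and `sioc_blockers()` are hypothetical names I made up, not part of any VMware API, and the automated tiering check is left out because it is a manual lookup in the Compatibility Guide.

```python
from dataclasses import dataclass, field


# Hypothetical, simplified model of a datastore. None of these names come
# from the vSphere API; they only mirror the prerequisite list above.
@dataclass
class Datastore:
    name: str
    protocol: str        # e.g. "FC", "iSCSI", "NFS", "RDM"
    extents: int         # number of LUNs backing the datastore
    vcenter_count: int   # vCenter Server systems managing the datastore
    host_versions: list = field(default_factory=list)  # e.g. ["4.1", "4.0"]
    vcenter_version: str = "4.1"
    license_edition: str = "Enterprise Plus"


def sioc_blockers(ds):
    """Return a list of reasons SIOC cannot be enabled (empty list = OK)."""
    problems = []
    if ds.vcenter_count != 1:
        problems.append("must be managed by a single vCenter Server")
    if ds.protocol not in ("FC", "iSCSI"):
        problems.append(f"{ds.protocol} is not supported")
    if ds.extents > 1:
        problems.append("datastores with multiple extents are not supported")
    old_hosts = [v for v in ds.host_versions if v != "4.1"]
    if old_hosts:
        problems.append(f"hosts not on ESX 4.1: {old_hosts}")
    if ds.vcenter_version != "4.1":
        problems.append("vCenter 4.1 is required")
    if ds.license_edition != "Enterprise Plus":
        problems.append("an Enterprise Plus license is required")
    # Automated tiering support is a manual Compatibility Guide lookup,
    # so it is deliberately not modelled here.
    return problems


if __name__ == "__main__":
    ds = Datastore("gold-01", "iSCSI", extents=1, vcenter_count=1,
                   host_versions=["4.1", "4.0"])
    print(sioc_blockers(ds))
```

For the example datastore, the only blocker reported is the ESX 4.0 host that is still connected.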

How does Storage IO Control work?

There are quite a few blogs on this topic. Essentially, you set a share level for each VM (actually, for each VM disk) and apply IOPS limits if you have to. Those values are used when SIOC is operating. Please be aware that SIOC doesn't just monitor a single point and adjust a single value to keep your latency below the configured threshold. It actually adjusts multiple layers of the I/O flow to make that happen (I will explain this later).
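To make the idea of shares plus limits concrete, here is a simplified model. It assumes a fixed IOPS capacity (a made-up number) that is split among VM disks in proportion to their shares; any disk that would exceed its IOPS limit is capped, and the leftover is redistributed to the others. SIOC itself works by throttling device queue slots rather than handing out IOPS directly, so treat the hypothetical `allocate_iops()` as an illustration of proportional shares, not VMware's implementation.

```python
def allocate_iops(capacity, disks):
    """Split `capacity` IOPS among VM disks in proportion to their shares.

    disks maps a disk name to (shares, limit), where limit is an IOPS cap
    or None. Disks whose proportional slice would exceed their limit are
    pinned at the limit and the rest is re-divided among the others.
    """
    alloc = {}
    active = dict(disks)
    remaining = float(capacity)
    while active:
        total_shares = sum(shares for shares, _ in active.values())
        capped = {
            name: limit
            for name, (shares, limit) in active.items()
            if limit is not None and remaining * shares / total_shares > limit
        }
        if not capped:
            # Nobody hits a limit: hand out what is left purely by shares.
            for name, (shares, _) in active.items():
                alloc[name] = remaining * shares / total_shares
            break
        for name, limit in capped.items():
            alloc[name] = float(limit)
            remaining -= limit
            del active[name]
    # If every disk is capped, leftover capacity simply stays unused.
    return alloc


if __name__ == "__main__":
    disks = {"sql": (2000, None), "web": (1000, None), "backup": (1000, 300)}
    print(allocate_iops(3000, disks))
```

With 3000 IOPS and disks `sql` (2000 shares), `web` (1000 shares) and `backup` (1000 shares, 300 IOPS limit), `backup` is capped at 300 and the remaining 2700 IOPS are split 2:1, giving `sql` 1800 and `web` 900.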

Before Storage IO Control arrived

Let me quote Scott Drummonds' article to explain the difference between the previous disk share control and SIOC.

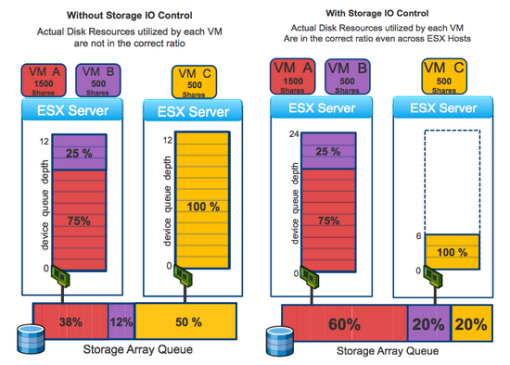

The fundamental change provided by SIOC is volume-wide resource management. With vSphere 4 and earlier versions of VMware virtualization, storage resource management is performed at the server level. This means a virtual machine on its own ESX server gets full access to the device queue. The result is unfettered access to the storage bandwidth regardless of resource settings, as the following picture shows.

This is from Yellow Bricks:

As the diagrams clearly show, the current version of shares works on a per-host basis. When a single VM on a host floods your storage, all other VMs on the datastore will be affected. Those that are running on the same host could easily, by using shares, carve up the bandwidth. However, if the VM that causes the load moved to a different host, the shares would be useless. With SIOC, the fairness mechanism that was introduced goes one level up. That means that Disk shares will be taken into account at the cluster level.

As far as I understand, SIOC no longer manages only a single host's IOPS. Instead, it monitors datastore-wide latency, each host's HBA queue and each VM's IOPS together, and dynamically adjusts I/O. When congestion is detected, SIOC steps in and adjusts multiple layers of I/O to make sure VMs with high disk shares are prioritized.
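The difference between the per-host shares described in the quotes and SIOC's datastore-wide view can be shown with a toy calculation. The host names, share values and queue sizes below are made up, and `datastore_wide_view()` is a simplified model (slots divided by shares across the whole datastore), not the real SIOC algorithm.

```python
def per_host_view(placement, host_queue_depth):
    """Pre-SIOC model: each host divides only its own device queue by shares."""
    slots = {}
    for vms in placement.values():
        host_total = sum(vms.values())
        for vm, shares in vms.items():
            slots[vm] = host_queue_depth * shares / host_total
    return slots


def datastore_wide_view(placement, total_slots):
    """SIOC-style model: slots are divided by shares across the whole datastore."""
    all_shares = sum(s for vms in placement.values() for s in vms.values())
    return {
        vm: total_slots * shares / all_shares
        for vms in placement.values()
        for vm, shares in vms.items()
    }


if __name__ == "__main__":
    # Hypothetical layout: a low-share "noisy" VM alone on esx01.
    placement = {
        "esx01": {"noisy": 500},
        "esx02": {"db": 2000, "web": 1000},
    }
    print(per_host_view(placement, host_queue_depth=32))
    print(datastore_wide_view(placement, total_slots=64))
```

In the per-host view, `noisy` gets all 32 slots of its host, more than either of the higher-priority VMs on esx02. The datastore-wide view reverses that ordering, which is exactly the fairness problem SIOC is meant to address.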

Monitoring and adjusting points:

- Each VM's access to its host's I/O queue. SIOC makes sure this access is based on the VM's I/O priority (disk shares).

- The logical device I/O queue of each host. The lower limit is 4 and the upper limit is the minimum of the queue depth set by SIOC and the queue depth set in the HBA driver.
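Read literally, those device queue bounds reduce to a clamp: whatever depth SIOC sets is capped by the HBA driver's queue depth and never drops below 4. The function and parameter names are my own; this only illustrates the bounds, not how SIOC chooses its depth.

```python
LOWER_LIMIT = 4  # minimum device queue depth


def effective_queue_depth(sioc_depth, hba_driver_depth):
    """Clamp a host's device queue depth into [4, min(SIOC, HBA driver)]."""
    upper = min(sioc_depth, hba_driver_depth)
    # The upper bound is applied first and the floor second, so the
    # result is never below 4 even if the HBA driver depth were smaller.
    return max(LOWER_LIMIT, upper)


if __name__ == "__main__":
    print(effective_queue_depth(sioc_depth=32, hba_driver_depth=64))
    print(effective_queue_depth(sioc_depth=1, hba_driver_depth=64))
```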

SIOC monitors the usage of the device queue in each host, the aggregate I/O requests per second from each host (be aware that this aggregation takes into account both the number of I/Os and the size of each I/O, over time) and the datastore-wide I/O latency, every 4 seconds for each individual datastore. It also throttles the device queue in each host.
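To illustrate the 4-second loop, here is a toy controller. It assumes the datastore-wide latency is the I/O-weighted average of each host's observed latency, and that each host then nudges its device queue depth toward `depth * threshold / latency`, smoothed by a made-up factor `gamma`. This is only a sketch in the spirit of SIOC, not VMware's actual algorithm; `THRESHOLD_MS` uses the 30 ms default.

```python
THRESHOLD_MS = 30.0  # default SIOC congestion threshold
LOWER_LIMIT = 4      # minimum device queue depth


def datastore_latency(samples):
    """I/O-weighted average latency over one 4-second window.

    samples: list of (host, io_count, avg_latency_ms) tuples, one per host.
    """
    total_ios = sum(io_count for _, io_count, _ in samples)
    if total_ios == 0:
        return 0.0
    return sum(io_count * lat for _, io_count, lat in samples) / total_ios


def next_queue_depth(depth, latency_ms, upper, threshold=THRESHOLD_MS, gamma=0.5):
    """Move a host's queue depth toward depth * threshold / latency.

    Above the threshold the target shrinks the queue; below it the queue
    grows back toward `upper`. `gamma` is a hypothetical smoothing factor.
    """
    if latency_ms <= 0:
        target = upper
    else:
        target = depth * threshold / latency_ms
    smoothed = (1 - gamma) * depth + gamma * target
    return max(LOWER_LIMIT, min(upper, round(smoothed)))


if __name__ == "__main__":
    window = [("esx01", 400, 45.0), ("esx02", 100, 20.0)]
    lat = datastore_latency(window)
    print(lat, next_queue_depth(32, lat, upper=64))
```

For example, if esx01 completes 400 I/Os at 45 ms and esx02 completes 100 I/Os at 20 ms, the datastore-wide latency is 40 ms, above the 30 ms threshold, so a host at depth 32 drops to 28. When latency falls below the threshold, the same formula lets the depth grow back toward the upper limit, and it never goes below 4.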

How to setup Storage I/O control?

As I mentioned above, it's fairly easy to set up as long as you go through the prerequisite list.

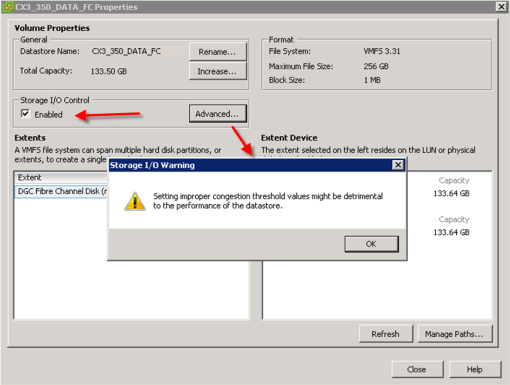

All you need to do is tick that box.

If you want to customize the congestion threshold (30 ms by default), click the Advanced button.

To see whether SIOC is actually working, go to vCenter -> Datastores -> select your datastore -> Performance. You can see that none of the VM disks has more than 30 ms latency. (Sorry, there is no workload in the picture.)

Reference:

http://vpivot.com/2010/05/04/storage-io-control/

http://www.yellow-bricks.com/2010/06/17/storage-io-control-the-movie/

http://www.vmware.com/files/pdf/techpaper/vsp_41_perf_SIOC.pdf

Comments

Here is a script that helps automate SIOC changes; otherwise you would have to use the vSphere Client manually to make this change on each and every datastore.

http://www.virtuallyghetto.com/2010/07/script-automate-storage-io-control-in.html

–William

How do I track performance after enabling SIOC?
